About this Guest Post

Linda Berube is no stranger to using web services to transform public libraries. As a regional manager for e-services and e-procurement, she not only oversaw the distributed interoperability of library management systems, but also created and managed the implementation of a co-operative national virtual reference service, the People’s Network Enquire. She currently coordinates and advises on policy, research, and project work for the Legal Deposit Advisory Panel, a non-departmental government body charged by the UK Secretary of State to make recommendations on regulations for the legal deposit of digital resources. She can be contacted at: ljberube@googlemail.com

If a Tree Falls in the Forest – and other thoughts on Web 2.0 Evaluation (pt.1)

A few things caught my eye on the way to writing this guest blog for UKOLN:

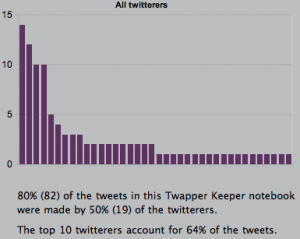

• The announcement that the Library of Congress will archive Tweets

• “Professor of War,” a Vanity Fair article reviewing the career of General David Petraeus, Commander of US Central Command. Of particular relevance was his father’s exhortation, “results, boy, results.”

• A discussion with a US librarian regarding how blogs can be evaluated absent any response posts from members of the public. (Hence, the title of this blog—if someone writes a blog and there is no response to posts, is it being read? Er, or something like that…)

What has any of this to do with evaluating the impact of Web 2.0 in libraries? In a way, they point to the key questions – what, why, and how – of any service development, Web 2.0-based or otherwise, the answers to which should provide the objectives for evaluation, not as a separate activity, but one that is integral to the service from the beginning.

As one who started some years ago to encourage public librarians to look at Web 2.0 services (for example, see my bit of technology forecasting for the Laser Foundation in 2005, On the Road Again), I found that the process of writing a book on the subject (Do You Web 2.0?) afforded me the opportunity to talk with a number of librarians from the UK, US, and Canada, not only about the services themselves, but also about their thoughts on impact and how it is evaluated. While I found many excellent examples of Web 2.0 services, I also encountered something called ‘the evaluation by-pass’. I like to refer to this as simply ‘the evaluation pass,’ as in “Evaluation? We took a pass on that for now. It’s early days, after all.” (For more on the evaluation by-pass, see Booth, A. (2007). “Blogs, wikis, and podcasts: the ‘evaluation by pass’ in action?” Health Information and Libraries Journal 24, pp. 298-302.)

I have had long, heartfelt email exchanges with librarians about how they know they should be evaluating, how they would if they could, how just doing it (Web 2.0) has been satisfactory enough, and so on. Reasons often cited for not evaluating include staff capacity; lack of motivation and/or support on the part of front-line staff or senior management; and simply not knowing what or how to evaluate in Web 2.0-based services.

My impression regarding these reasons, and especially this last, is that quite a few librarians have embarked on experimenting with Web 2.0 without a service mindset. So, before we consider how impact might be evaluated, some observations on ‘why’ are in order.

The Twitter Factor

Because the technology is low-to-no cost, quite a few librarians have given in to the temptation ‘to experiment’ with Web 2.0, thus setting themselves up for a common enough trap: high expectation meets low return. Librarians might say they don’t have high expectations when they start using these tools, but when blog posts are met with deafening silence, or when no one wants to be a ‘Friend’ or ‘Follower’ or ‘Fan’ of the library on a social networking site such as Twitter or Facebook, it’s hard not to feel rejected and to turn this bitterness against the technology. (“It works for some libraries, just not for ours.”)

I think a great deal of expectation has been cranked up about these tools in general, and librarians have certainly felt the peer pressure. The amount of publicity a service like Twitter gets, especially with regard to the value of its data, whether commercial or scholarly, compels librarians to think about trying it. And Twitter seemed to catch on overnight, growing exponentially, so that the quick win of instant attention appeared to be within everyone’s grasp just by signing up. Essentially, the expectation is that all a librarian has to do is set up a Twitter account and put out a few Tweets, and the public response will be instantaneous.

Results, Boy, Results

I understand the pressure exerted to try this new technology, and think that a little experimentation is a good thing. But expectations are no substitute for even the most minimal planning that focuses on objectives and outcomes, regardless of whether a library is just experimenting, testing proof of concept, or launching a live service. In various publications about the evaluation process, a common first step is to answer the question “why?” In other words, knowing the purpose of evaluation will often identify the necessary method for collecting data.

However, “why” should be asked at the very inception of a service, well before it is implemented: why are we doing this? Answers to this question should provide the basis for the service: its objectives, how it will be delivered (technology), and how success will be measured. Evaluation should not start after the service has been up and running for a while, and it should not be reactive (to stave off threats of budget cuts, awkward questions from senior management, etc.). The gathering of the required data should start from the first day of implementation and should be ongoing, as a matter of course.

This is just plain good service sense, whether that service is a homework help club, a book group, an online catalogue, or a Facebook page. It is no different for any service using Web 2.0 tools. So many librarians start out in an experimental mode, but I think the secret hope is to stumble upon a crowd-pleaser with little effort. Essentially, they believe that the technology is the point. But Web 2.0 is no more the point than any other technology: it’s about the service and what that service means to the community served.

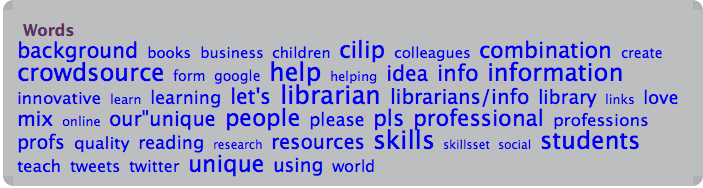

And service development should start with critical success factors against which impact on the community can be measured. With Web 2.0 tools, the confusion over what and how to evaluate arises from the original objectives of the tools, including how users measure success. For social networking sites like Twitter or Facebook, users measure success by counting the 3Fs: ‘followers’, ‘fans’, and ‘friends’. In addition, there are activities such as posts, tagging, ‘likes’, ‘retweets’, online games, and any number of other ways in which users indicate that they are reading, are interested, and want to share.

Librarians also evaluate success in terms of numbers: hits or visits on the webpage, registered users, reserves, etc. However, when they come to services like Facebook or Twitter, it is often difficult to translate the social activities and membership into anything of significance to library service (except for those pages that include local or WorldCat search capability, where searches and access can be counted). I have looked at a number of these pages, and frequently the numbers do not equate to anything meaningful, unless it is accepted that small numbers signify lack of a significant network or interest.

So, if numbers are required as a marker of success, which is often the case for public libraries, then the use of blogs, wikis, and especially social networking services must be very focused: not just to encourage participation but to ensure relevance and success. If we accept that it is the service and its support of users going about their business that should be the focus, and not the gratuitous use of technology because it is new, then what we need to identify is the service, the purpose of the service, and what success looks like.

For instance, suppose the library wants to start a reading group for the housebound: a virtual book group sounds like a good idea, and a number of Web 2.0 tools can support this activity. In this case, critical success factors could include:

• everybody in the book club to be signed on as a friend to a Facebook page;

• a calendar of events to be created, and members to be encouraged to sign up to an RSS feed of events;

• one book discussion meeting a month to be held on Facebook;

• an agreed level of participation that is considered successful (maybe based on how many “show up” for book discussions), etc.

Evaluation is this simple, and it is eminently measurable – a thriving book discussion group on Facebook, which opens this library activity up to the housebound and physically challenged. This is what success looks like for our book discussion club, and it can be measured, whether the days are early or late.
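For readers who like to see this written down, the book club's critical success factors could be tracked with something as small as the Python sketch below. It is only an illustration: the field names, the `evaluate` function, and the 50% participation threshold are all assumptions made for the example, and each library would agree its own figures.

```python
from dataclasses import dataclass


@dataclass
class MonthlyRecord:
    """One month of book-club data (all field names are illustrative)."""
    members: int            # people in the book club
    facebook_friends: int   # members signed on as friends of the Facebook page
    feed_subscribers: int   # members signed up to the RSS feed of events
    meetings_held: int      # book discussions held on Facebook this month
    attendees: int          # members who "showed up" for the discussion


def evaluate(record: MonthlyRecord, min_participation: float = 0.5) -> dict:
    """Check one month against the critical success factors.

    min_participation is a hypothetical 'agreed level of participation',
    expressed as the share of members attending the discussion.
    """
    if record.members:
        participation = record.attendees / record.members
    else:
        participation = 0.0
    return {
        "all_members_are_friends": record.facebook_friends >= record.members,
        "feed_signups": record.feed_subscribers,
        "monthly_meeting_held": record.meetings_held >= 1,
        "participation_rate": round(participation, 2),
        "participation_met": participation >= min_participation,
    }


# Example month with made-up numbers
march = MonthlyRecord(members=12, facebook_friends=12,
                      feed_subscribers=7, meetings_held=1, attendees=8)
print(evaluate(march))
```

The point is not the code but the habit: the same handful of figures is recorded every month from the first day of the service, so the evidence already exists when someone asks for it.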

Continued in Part 2