Posted on September 2nd, 2010 by Brian Kelly

Introduction

This seven point checklist presents some steps that creators and managers of community digital archives might take to make sure that their data is available in the long term. It is useful for many circumstances but it will be particularly relevant to community archives that depend on third party suppliers to provide technical infrastructure.

The economic downturn and poor trading conditions mean that some technology providers are unable to continue providing the services upon which community groups have depended. Because hardware, software and services are often very tightly integrated, the failure of a technology company can be very disruptive to its customers. This is especially true if systems are proprietary and customers are ‘locked in’ to particular services, tools or data types. The key message is that community archives need to retain sufficient control of content in order that services can be moved from one service provider to another. Change brought about through insolvency is disruptive and unwelcome: the more control that a group has over content, the less disruptive it will be.

Consideration of the following seven points might help reduce disruption in the event that a content management company withdraws its services.

1. Keep the Masters

Many community groups hold a mix of photographs, sound recordings, video and text in digital form. Some of these are digital copies that have been scanned, such as old photographs and letters; others are ‘born digital’, created using digital cameras or digital sound recording equipment. In every case the underlying data will be captured in one of a series of file formats. A simple rule of thumb is to retain a high quality ‘original’ which has not been processed or edited, and to make sure that the community group has direct access to this ‘original’ without relying on the content management company.

2. Know What’s What

The rapid proliferation of digital content means that it can be hard to keep track of content – even in a relatively small organisation. Typically a content management company will use a database to catalogue content and then use the database to drive a Web site that makes it available to the public. So, to retain control over content community archives should keep a copy of the catalogue. The database can be complex and even when it is implemented in open source software, it can be proprietary.

The tools used to describe a collection depend on the nature of the collection. For example, archives are often described in ‘Encoded Archival Description’ while images might best be described using the ‘VRA Core’ standard. It’s useful to know a little about the standards that apply in your area.

3. There Should be a Disaster Plan

Most content management companies will have some kind of disaster plan – a backup copy which can be made available in the event of some unforeseen break of service. Good practice means that the content management company should keep multiple copies of data in multiple locations. It is reasonable for a community group to see a copy of the disaster plan and for parts of the disaster plan to be written into the contract between the contractor and the community group. You should ask for evidence that the disaster plan has been tried out and agree how quickly your data would be restored should a disaster occur. It is also reasonable to request or keep a copy of your data for safekeeping, though you may need to plan how and in what format you receive this and you may want to update it periodically.

A common approach to backups is called the ‘Grandfather – Father – Son’ approach. A complete copy is taken every month and stored remotely (Grandfather). A complete copy is taken every week but kept locally (Father) and a daily backup is made of recent changes (Son). The frequency of backups should be dictated by the frequency of changes. Ask your service provider how they approach this.
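
As a rough illustration of the rotation described above, the sketch below labels the backup taken on a given date. The schedule it uses (the first day of the month is the Grandfather, Sundays are the Father, every other day is a Son) and the function name `backup_tier` are assumptions made for this example; your service provider's schedule may well differ.

```python
from datetime import date


def backup_tier(day: date) -> str:
    """Label the backup taken on `day` under an assumed GFS schedule."""
    if day.day == 1:
        return "grandfather"  # monthly complete copy, stored remotely
    if day.weekday() == 6:    # Monday is 0, so 6 is Sunday
        return "father"       # weekly complete copy, kept locally
    return "son"              # daily backup of recent changes
```

A table like this is mainly useful as a talking point: it makes it easy to ask a provider which copies exist for any given day and where each one is held.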

4. Agree a Succession Plan

A good content management company will also have a succession plan and be willing to involve you in this. Although it is not a happy topic, a shared understanding of rights and expectations of what should happen when either partner is no longer able to maintain a contractual relationship can go a long way to reassuring both parties. This is particularly important where a hosting company is employed to deliver content which is not theirs. It is not unreasonable to include a note within the contract clearly identifying that content provided to the hosting company remains the property of the party supplying it and that, should there be any break in the contract, the contractor will be obliged to return it. In reality this does not guarantee that you will get content back if a company goes into liquidation but it does secure your right to ask the administrator for it, and if that is not successful then you are clear about your rights to use the masters and backups which have been lodged with you.

5. Know Your Rights

Rights management can be daunting but it is important to be clear when engaging a third party contractor of the limits of what they are entitled to do with content that a community archive might produce. A good content management contract is likely to give the content management company a licence to distribute content on your behalf for a given period – and it should also specify that technical parts of the service such as software are the property of the content management company. In reality this can be complicated because the community archive may itself be depending on agreements from the actual copyright holders and elements of design and coding will be shared. But so long as you are clear that the content provider will not become the owner of the content once it’s on their site, and that you can terminate their licence after appropriate notice, then it will be easier for you to pass the masters to a new company.

6. Find a Digital Preservation Service

A small number of services exist to look after data for you: either funded as part of existing infrastructure or as a service you can buy. Many local government archives and libraries are developing digital preservation facilities for their own use and might welcome an approach from a community group. Other types of partnership might also make sense: many universities now maintain digital archives for research so it might be useful to talk to a university archivist. Facilities also operate thematically – for example there is a national facility allowing archaeologists to share short reports of excavations. Image and sound libraries may also be able to provide an archival home to data or provide advice, while other services provide digital preservation on a commercial basis. In the same way publishers have started sharing some of their content to reduce their risks and risks to their clients. Having a preservation partner can be very useful for you in the short term and in the long term and will make you a lot more confident that your data will be safe even if the content management company is not around to service it.

7. Put a Copy of your Web Site in a Web Archive

There are a number of services that can make copies of online content before a supplier goes into liquidation. A free service from the British Library called the UK Web Archive exists to ‘harvest’ Web sites in the UK. It can create a simple static copy of your Web site and present this back to you under certain limitations. The UK Web Archive is free but it is based on a recommendation: you need to ask them to take a copy and need to give them permission to do so. But once you’ve given them permission they can harvest the site periodically and so build up a picture of your Web site through time. The UK Web Archive is ideal for relatively static Web sites – but is less good with sites that require passwords, which change quickly or which contain lots of dynamic content. Similar services exist: some, such as the US-based Internet Archive, have paid-for services that allow users to control the harvesting of content and allow more complicated data types to be managed. Considering the ease of use and how quickly it can gather content, every community archive should consider registering with a service like this as a way to offset the risks of a supplier going into liquidation.

See the briefing paper on Web Archiving for further information [1].

The UK Web Archive is one of a number of services that can make a copy of your Website. So, in the worst case, users can be directed to a version of your site fixed at one point in time [2].

Acknowledgements

This briefing paper was written by William Kilbride of the Digital Preservation Coalition [3].

References

- Web Archiving, Cultural Heritage briefing paper no. 53, UKOLN, <http://www.ukoln.ac.uk/cultural-heritage/documents/briefing-53/>

- UK Web Archive, <http://www.Webarchive.org.uk/>

- Digital Preservation Coalition, <http://www.dpconline.org/>

Filed under: Digital Preservation, Needs-checking | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

Closing Down Blogs

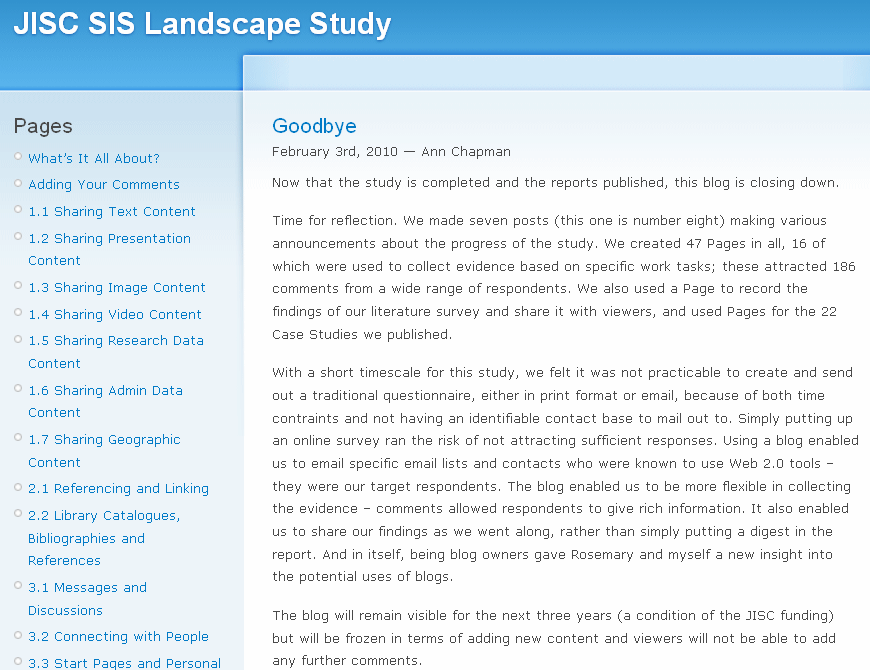

There may be times when there is no longer effort available to continue to maintain a blog. There may also be occasions when a blog has fulfilled its purpose. In such cases there is a need to close the blog in a managed fashion. An example of a project blog provided by UKOLN which was closed in such a managed fashion after the project had finished is the JISC SIS Landscape Study blog. The final blog post [1] is shown below.

Figure 1: Announcement of the Closure of the JISC SIS Landscape Study Blog

The blog post makes it clear to the reader that no new posts will be published and no additional comments can be provided. Summary statistics about the blog are also provided which enables interested parties to have easy access to a summary showing the effectiveness of the blog service.

Reasons For Blog Closure

Blogs may need to be closed for a number of reasons:

- The blog author(s) may find it too time-consuming to continue to maintain a blog or to find ideas to write about or the initial enthusiasm may have waned.

- The blog author(s) may have left the organisation or have moved to other areas of work.

- The blog may not be providing an adequate return on investment.

- A blog may be withdrawn due to policy changes or managerial edict.

- The original purposes for the blog may no longer be relevant.

- Funding to continue to maintain the blog may no longer be available.

Prior to managing the closure of a blog it is advisable to ensure that the reasons for the closure of the blog are well understood and appropriate lessons are learnt.

Possible Approaches

A simple approach to closing a blog is simply to publish a final post giving an appropriate announcement, possibly containing a summary of the achievements of the blog. Comment submissions should be disabled to avoid spam comments being published. This was the approach taken by the JISC SIS Landscape Study blog [1].

A more draconian approach would be to delete the blog. This will result in the contents of the blog being difficult to find, which may be of concern if useful content has been published. If this approach has to be taken (e.g. if the blog software can no longer be supported or the service is withdrawn) it may be felt desirable to ensure that the contents of the blog are preserved.

Preserving the Contents of the Blog

A Web harvesting tool (e.g. WinHTTrack) could be used to copy the contents of the blog’s Web site to another location. An alternative approach would be to migrate the content using the blog’s RSS feed. If this approach is taken you should ensure that an RSS feed for the complete content is used. A third approach would be to create a PDF resource of the blog site. Further advice is provided at [2].
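
To give a feel for the RSS approach, here is a minimal sketch that pulls the posts out of an RSS 2.0 feed using only the Python standard library. The sample feed, its URL and the function name `extract_posts` are invented for illustration; a real blog's feed may contain extra elements (such as full-content extensions) that this sketch ignores.

```python
import xml.etree.ElementTree as ET

# Invented sample feed, standing in for the blog's real RSS output.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example project blog</title>
    <item>
      <title>Goodbye</title>
      <link>http://example.org/2010/02/03/goodbye/</link>
      <pubDate>Wed, 03 Feb 2010 09:00:00 GMT</pubDate>
      <description>Final post.</description>
    </item>
  </channel>
</rss>"""


def extract_posts(feed_xml: str) -> list[dict]:
    """Return one dictionary per <item> found in an RSS 2.0 feed."""
    root = ET.fromstring(feed_xml)
    posts = []
    for item in root.iter("item"):
        posts.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "published": item.findtext("pubDate", default=""),
            "body": item.findtext("description", default=""),
        })
    return posts
```

Before relying on this kind of export, compare the number of items returned with the number of posts on the blog: many feeds only include the most recent entries.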

References

- Goodbye, JISC SIS Landscape Study blog, 3 Feb 2010,

<http://blogs.ukoln.ac.uk/jisc-sis-landscape/2010/02/03/goodbye/>

- The Project Blog When The Project Is Over, UK Web Focus blog, 15 Mar 2010

<http://ukwebfocus.wordpress.com/2010/03/15/the-project-blog-when-the-project-is-over/>

Filed under: Blogs, Needs-checking | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

About Comments On Blogs

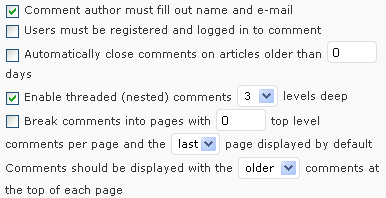

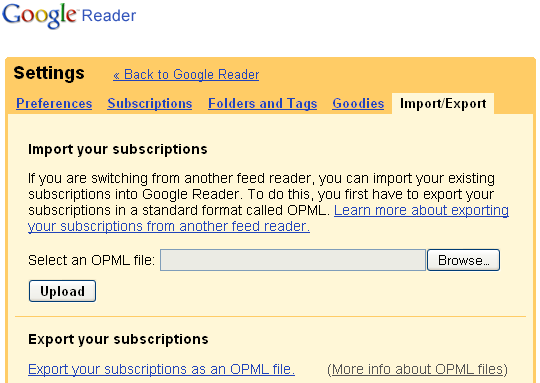

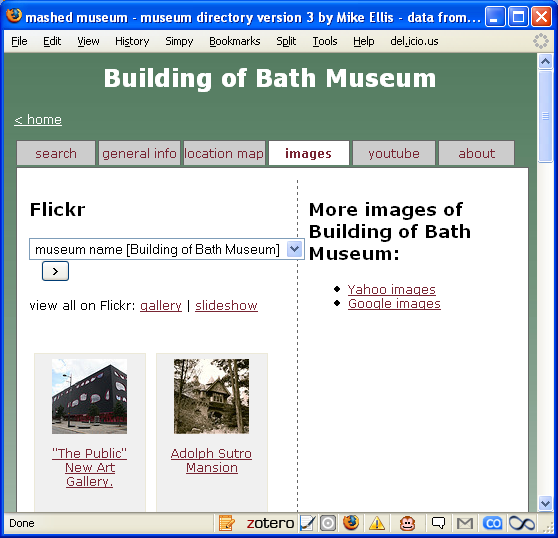

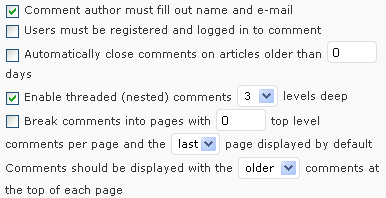

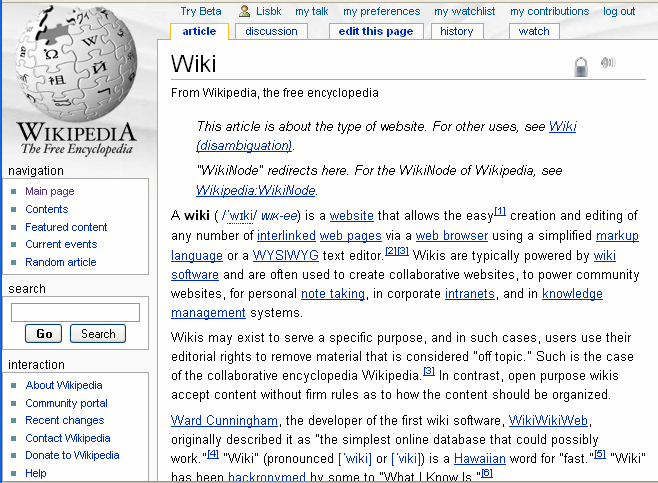

Many blog services allow comments to be made on the blog posts. This facility is normally configurable via the blog owner’s administrator’s interface. An example of the interface in the WordPress blog is shown in Figure 1.

Figure 1: Administrator’s Interface for Blog Comments on WordPress Blog

The Need For A Policy

A policy on dealing with comments made to blog posts is advisable in order to handle potential problems. How should you address the following concerns, for example:

- Your comments are full of spam messages.

- Abusive comments are posted.

- Comments are posted to old messages with content which is no longer relevant.

- An excessive amount of resources is needed to manage blog comments.

A blog post and subsequent discussion [1] on the UK Web Focus blog identified a number of views on policies on the moderation of blog comments which are summarised in this briefing document.

Moderated or Unmoderated Blog Comments

A simple response to such concerns might be to require all comments to be approved by the blog moderator. However this policy may hinder the development of a community based around a blog by providing a bottleneck which slows down the display of comments. In a situation in which a blog post is published late on a Friday afternoon, a blog discussion which could take place over the weekend is likely to be stifled by the delayed approval of such comments.

The UK Web Focus blog allows comments to be posted without the need for approval by the blog administrator, although a name and email address do have to be provided. It should be recognised, however, that the lack of a moderation process could mean that automated spam comments are submitted to the blog, thus limiting the effectiveness of the blog and the comment facility. The UK Web Focus blog, however, is hosted on WordPress.com which provides a comment spam filtering service called Akismet. This service has proved very effective in blocking automated spam [2].

Differing Policies for Different Types of Blogs

The policy of moderation of comments to a blog is likely to be dependent on a number of factors such as: (a) the availability of automated spam filtering tools; (b) the effort needed to approve comments; (c) the effort needed to remove comments which have failed to be detected by the spam filter; (d) the purpose of the blog and (e) the likelihood that inappropriate comments may be posted.

Publicising Your Policy

It would be helpful for blog owners to make their policies on content moderation clear. An example of a policy can be seen from [3]. It may be useful for your policy to allow for changes in the light of experiences. If you require moderation of comments but find that this hinders submission of comments, you may choose to remove the moderation. However, if you find that an unmoderated blog attracts large amounts of unwanted comments you may decide to introduce some form of comment moderation.

References

- Moderated Comments? Closed Comments? No Thanks!, UK Web Focus blog, 15 Feb 2010,

<http://ukwebfocus.wordpress.com/2010/02/15/moderated-comments-closed-comments-no-thanks/>

- A Quarter of a Million and Counting, UK Web Focus blog, 6 Jun 2008,

<http://ukwebfocus.wordpress.com/2008/06/08/a-quarter-of-a-million-and-counting/>

- Blog Policies, UK Web Focus blog,

<http://ukwebfocus.wordpress.com/blog-policies/>

Filed under: Blogs | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

About These Documents

This document is 3 of 3 which describe best practices for consuming APIs provided by others.

Clarifying Issues

Certain issues should be clarified before use of an external API. The two key matters for elucidation are data ownership and costing. You should be clear on which items will be owned by the institution or Web author and which will be owned by a third party. You should also be clear on what the charging mechanism will be and the likelihood of change.

These matters will usually be detailed in a terms of use document and the onus is on you as a potential user to read them. If they are not explained you should contact the provider.

Understand Technology Limitations

API providers have technical limitations too and a good understanding of these will help keep your system running efficiently. Think about what will happen when the backend is down or slow and make sure that you cache remote sources aggressively. Try to build some pacing logic into your system. It’s easy to overload a server accidentally, especially during early testing. Ask the service provider if they have a version of the service that can be used during testing. Have plans for whenever an API is down for maintenance or fails. Build in timeouts, or offline updates to prevent a dead backend server breaking your application. Make sure you build in ways to detect problems. Providers are renowned for failing to provide any information as to why they are not working.
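
A minimal sketch of the pacing and timeout advice above, using only the Python standard library. The class name `Pacer`, the function `fetch` and the default values are assumptions made for this example rather than part of any particular API; the clock and sleep functions are passed in so the pacing can be tried out without really waiting.

```python
import time
import urllib.request


class Pacer:
    """Enforce a minimum gap (in seconds) between calls to a remote API."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until at least `min_interval` has passed since the last call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


def fetch(url, pacer, timeout=5.0):
    """Fetch a URL politely: pace the request and give up after `timeout` seconds."""
    pacer.wait()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()
```

Sharing one `Pacer` between all the calls made to the same provider keeps the overall request rate under control, which matters most during early testing.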

Write your application so it stores a local copy of the data so that when the feed fails it can carry on. Make this completely automatic so the system detects for itself whether the feed has failed. However, also provide a way for the staff to know that it has failed. I had one news feed exhibit not update the news for 6 months but no one noticed because there was no error state.
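
One possible shape for this, sketched under some assumptions: the feed data can be stored as JSON, `fetch` is whatever function your application uses to call the remote feed, and the name `load_feed` is invented for the example. The second value returned tells the caller whether the data is fresh, so the failure can be shown to staff instead of passing unnoticed.

```python
import json
import logging
from pathlib import Path

log = logging.getLogger("feed")


def load_feed(fetch, cache_file: Path):
    """Return (data, is_fresh), falling back to the local copy if fetch() fails."""
    try:
        data = fetch()
    except Exception:
        # Record the failure so that staff can find out the feed is broken.
        log.exception("Feed fetch failed; falling back to local copy")
        if not cache_file.exists():
            raise  # nothing to fall back to
        return json.loads(cache_file.read_text(encoding="utf-8")), False
    # The fetch worked: refresh the local copy for next time.
    cache_file.write_text(json.dumps(data), encoding="utf-8")
    return data, True
```

An exhibit could, for instance, show a small "last updated" notice whenever `is_fresh` is false, which gives the error state that was missing in the anecdote above.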

You will also need to be wary of your own technology limitations. Avoid overloading your application with too many API bells and whistles. Encourage and educate end users to think about end-to-end availability and response times. If necessary limit sets of results. Remember to check your own proxy: occasionally data URLs may be temporarily blocked because they come from separate sub-domains.

Other technology tips include remembering to register additional API keys when moving servers.

Keep it Simple

When working with APIs it makes sense to start simple and build up. Think about the resource implications of what you are doing. For example, build on top of existing libraries: try to find a supported library for your language of choice that abstracts away from the details of the API. Wrap external APIs; don’t change them, as this will be a maintenance nightmare. The exception here is if your changes can be contributed back and incorporated into the next version of the external API. APIs often don’t respond the way you would expect, so make sure you don’t inadvertently make another system a required part of your own.
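
The wrapping advice might look something like the sketch below. `ThirdPartyClient` and its `search_items` method are hypothetical stand-ins for an external library, and `CatalogueSearch` is an invented name for your own wrapper; the point is only that the rest of the application talks to the wrapper and never to the external code directly.

```python
import logging

log = logging.getLogger("catalogue")


class ThirdPartyClient:
    """Stand-in for an external library (hypothetical, for illustration)."""

    def search_items(self, q, max_results):
        return {"results": [{"ttl": f"Result for {q}"}][:max_results]}


class CatalogueSearch:
    """Thin wrapper: the rest of the application calls only this class."""

    def __init__(self, client):
        self._client = client

    def search(self, query, limit=10):
        try:
            raw = self._client.search_items(query, limit)
        except Exception:
            # The external service is optional: record the failure and carry on.
            log.exception("External search failed for %r", query)
            return []
        # Translate the provider's field names into our own.
        return [item["ttl"] for item in raw.get("results", [])]
```

If the provider later renames a field or is replaced by another service, only the wrapper needs to change.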

When working with new APIs give yourself time. Not all APIs are immediately usable. Try to ensure that the effort required to learn how to use APIs is costed into your project and ensure the associated risks are on the project’s risk list.

Some Web developers lean towards consuming lean and RESTful APIs; however, this may not be appropriate for your particular task. SOAP-based APIs are generally seen as unattractive as they tend to take longer to develop for than RESTful ones. Client code also suffers much more when any change is made to a SOAP API.

Acknowledgements

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

About The Best Practices For APIs Documents

The Best Practices For APIs series of briefing documents have been published for the cultural heritage sector.

The advice provided in the documents is based on resources gathered by UKOLN for the JISC-funded Good APIs project.

Further information on the Good APIs project is available from the project’s blog at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

Filed under: APIs, Needs-checking | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

About These Documents

This document is 2 of 3 which describe best practices for consuming APIs provided by others.

Risk Management

When relying on an externally hosted service there can be some element of risk such as loss of service, change in price of a service or performance problems. Some providers may feel the need to change APIs or feeds without notice, which may mean that your application’s functionality becomes deprecated. This should not stop developers from using these providers but means that you should be cautious and consider providing alternatives for when a service is not (or no longer) available. Developers using external APIs should consider all eventualities and be prepared for change. One approach may be to document a risk management strategy [1] and have a redundancy solution in mind. Another might be to avoid using unstable APIs in mission critical services: bear in mind the organisational embedding of services. Developing a good working relationship with the API supplier wherever possible will allow you to keep a close eye on the current situation and the likelihood of any change.

Provide Documentation

When using an external API it is important to document your processes. Note the resources you have used to assist you, dependencies and workarounds and detail all instructions. Record any strange behaviour or side effects. Ensure you document the version of API your service/application was written for.

Benchmark the APIs you use in order to determine the level of service you can expect to get out of them.
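
A very simple way to start benchmarking is to time a handful of calls and look at the spread. In the sketch below `call` is any zero-argument function that makes one request to the API; the function name `benchmark` and the choice of statistics are assumptions made for the example.

```python
import statistics
import time


def benchmark(call, runs=5):
    """Time `runs` invocations of `call` and summarise the results in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        timings.append(time.perf_counter() - start)
    return {
        "min": min(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }
```

Keep the number of runs small and spread them out so that the measurement itself does not put undue load on the provider.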

Share Resources and Experiences

It could be argued that open APIs work because people share. Feeding back things you learn to the development community should be a usual step in the development process.

API providers benefit from knowing who uses their APIs and how they use them. You should make efforts to provide clear, constructive and relevant feedback on the code (through bug reports), usability and use of the APIs you engage with. If it is open source code it should be fairly straightforward to improve an API to meet your needs and in doing so offer options to other users. If you come across a difficulty that the documentation failed to solve then either update the documentation, contact the provider or blog about your findings (and tell the provider). Publish success stories and provide workshops to showcase what has been and can be achieved. Sharing means that you can save others time. The benefits are reciprocal. As one developer commented:

“If you find an interesting or unexpected use of a method, or a common basic use which isn’t shown as an example already, comment on its documentation page. If you find that a method doesn’t work where it seems that it should, comment on its documentation page. If you are confused by documentation but then figure out the intended or correct meaning, comment on its documentation page.”

Sharing should also be encouraged internally. Ensure that all the necessary teams in your institution know which APIs are relevant to what services, and that the communications channels are well used. Developers should be keeping an eye on emerging practice; what’s ‘cool’ etc. Share this with your team.

Feed back how and why you are using the API. Often service providers are in the dark about who is using their service and why, and being heard can help guide the service to where you need it to be, as well as re-igniting developer interest in pushing on the APIs.

Respect

When using someone else’s software it is important to respect the terms of use. This may mean making efforts to minimise load on the API provider’s servers or limiting the number of calls made to the service (e.g. by using a local cache of returned data, only refreshed once a given time period has expired). Using restricted examples while developing and testing is a good way to avoid overloading the provider’s server. There may also be sensitivity or IPR issues relating to data shared.

Note that caching introduces technical issues. Latency or stale data could be a problem if there is caching.
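
The time-limited cache mentioned above can be sketched in a few lines. The class name `TTLCache` and its parameters are invented for this example, and `fetch` stands for whatever function calls the remote service. Choosing `max_age` is where the trade-off lies: a longer period means fewer calls to the provider but staler data.

```python
import time


class TTLCache:
    """Keep one fetched value and refresh it only after `max_age` seconds."""

    def __init__(self, fetch, max_age, clock=time.monotonic):
        self._fetch = fetch
        self._max_age = max_age
        self._clock = clock
        self._value = None
        self._fetched_at = None

    def get(self):
        now = self._clock()
        expired = self._fetched_at is None or now - self._fetched_at >= self._max_age
        if expired:
            # Only now do we call the provider again.
            self._value = self._fetch()
            self._fetched_at = now
        return self._value
```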

Acknowledgements

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

References

- Using the Risks and Opportunities Framework, UKOLN briefing document no. 68, <http://www.ukoln.ac.uk/cultural-heritage/documents/briefing-68/>

Filed under: APIs, Needs-checking | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

About These Documents

This document is 1 of 3 which describe best practices for consuming APIs provided by others.

Be Careful In Selecting The APIs

Choose the APIs you use carefully. You can find potential APIs by signing up to RSS feeds, registering for email notifications for when new APIs are released, checking forums and searching API directories.

A decision on using an API can be made for a number of reasons (it’s the only one available, we’ve been told to use it, etc.) but developers should check the following:

- That it is the best fit for your needs. There may well be other APIs out there that are more appropriate. Good research is very important as it is more than likely that for popular APIs someone has probably done the hard work and produced a library for your language of choice. That said it is possible that you might have to compromise.

- What the API does. Spend some time finding out.

- How good the documentation is. Check that the documentation correctly matches the API being used. Request sample application code that communicates with the API. Initially commercial software vendors were reluctant to provide good, well-documented services and often only provided simple data transaction services. Good documentation is now accepted as critical.

- That the API is connected to a functional description, i.e. an overall description of the function of the entire application.

- That there is a dialogue with the developers such as a forum or email list. This will help establish if there is continued support for bug fixes etc.

- That this API does not clash with others you are using and will be able to ‘keep in step’.

- That it is a stable API. APIs that are still evolving are liable to change.

- How reliable it is. Some API providers have a better reputation than others.

- How popular it is. Popular APIs tend to have an active user community.

- Whether it is still managed. APIs which are not currently managed are unlikely to be supported.

- If selecting a product with APIs offered as part of the package ensure you evaluate the APIs too.

- What language it has been coded in.

- Whether a roadmap explaining likely directions of future developments is available.

Study various information sources for each potential API. These could include tutorials, online forums, mailing lists and online magazine articles offering an overview or introduction to the technology as well as the official sources of information. There are also a number of user satisfaction services available such as getsatisfaction [1] or uservoice [2]. The JDocs Web site [3] maintains a searchable collection of Java related APIs and allows user comments to be added to the documentation. You may find that others have encountered problems with a particular API.

Once you have chosen an API it may be appropriate to write a few basic test cases before you begin integration.
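
As an idea of what "a few basic test cases" could look like, here is a sketch using Python's built-in `unittest` module. The function `get_record` is a hypothetical stand-in for a call to the API you have chosen; in practice it would be replaced by a real call, ideally against a test version of the service.

```python
import unittest


def get_record(record_id):
    """Stand-in for a call to the chosen API (hypothetical)."""
    if record_id <= 0:
        raise ValueError("record_id must be positive")
    return {"id": record_id, "title": "Sample record"}


class BasicApiChecks(unittest.TestCase):
    def test_known_record_has_expected_fields(self):
        record = get_record(1)
        self.assertEqual(record["id"], 1)
        self.assertIn("title", record)

    def test_bad_id_is_rejected(self):
        with self.assertRaises(ValueError):
            get_record(0)
```

Saved in a file, these checks can be run with `python -m unittest`. Running them again whenever the provider announces a new version gives early warning of changes in behaviour.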

If you’re not paying for an API then make sure that the API is part of the provider’s core services which they use themselves. If the provider produces a custom service just for you then if they’re not being paid they have no incentive to keep that API up to date.

As one developer advised:

“When using APIs from others, do a risk assessment. Think about what you want for the future of the application (or part thereof) that will depend on the API, assess its value and the cost of losing it unexpectedly during its intended lifespan, guesstimate how likely it will be that the API will change significantly or become unavailable /useless in that time span. Think about an exit strategy. Consider intermediary libraries if they exist (e.g. for mapping) to allow a ready switch from one API”

Acknowledgements

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

References

- Getsatisfaction, <http://getsatisfaction.com/>

- Uservoice, <http://uservoice.com/>

- JDocs, <http://www.jdocs.com/>

Filed under: APIs | No Comments »

Posted on September 2nd, 2010 by Brian Kelly

Make Sure the API Works

Make your API scalable (i.e. able to cope with a high number of hits) and extendable, and design for updates. Test your APIs as thoroughly as you would test your user interfaces and, where relevant, ensure that they return valid XML (i.e. no missing or invalid namespaces, or invalid characters).
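
A first automated check on XML output can be as small as the sketch below, which uses the parser in the Python standard library. Note the limits of what it shows: it confirms that a response is well-formed (balanced tags, declared namespace prefixes) but does not validate it against a schema. The function name `is_well_formed` is an assumption made for the example.

```python
import xml.etree.ElementTree as ET


def is_well_formed(xml_text: str) -> bool:
    """Return True if the text parses as XML, False otherwise."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError:
        return False
    return True
```

Running a check like this over a sample of real responses, including error responses, is a cheap way to catch problems before your users do.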

Embed your API in a community and use them to test it. Use your own API in order to experience how user friendly it is.

As one developer commented:

“Once you have a simple API, use it. Try it on for size and see what works and what doesn’t. Add the bits you need, remove the bits you don’t, change the bits that almost work. Keep iterating till you hit the sweet spot.”

Obtain Feedback On Your API

Include good error logging, so that when errors happen, the calls are all logged and you will be able to diagnose what went wrong:

“Fix your bugs in public”
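
One way to make sure failed calls are always logged is to wrap each handler, as in this sketch. The decorator name `log_errors` and the handler `get_item` are hypothetical; the logging calls themselves come from the Python standard library.

```python
import functools
import logging

log = logging.getLogger("api")


def log_errors(handler):
    """Log the call details whenever the wrapped handler raises an error."""

    @functools.wraps(handler)
    def wrapper(*args, **kwargs):
        try:
            return handler(*args, **kwargs)
        except Exception:
            # log.exception records the message together with the traceback.
            log.exception(
                "API call %s failed: args=%r kwargs=%r",
                handler.__name__, args, kwargs,
            )
            raise

    return wrapper


@log_errors
def get_item(item_id):
    """Hypothetical API handler used to illustrate the decorator."""
    items = {1: "Photograph, 1910"}
    return items[item_id]
```

Because the error is re-raised after logging, the caller still sees the failure; the log simply keeps enough detail to diagnose it later.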

If possible, get other development teams/projects using your API early to get wider feedback than just the local development team. Engage with your API users and encourage community feedback.

Provide a clear and robust contact mechanism for queries regarding the API. Ideally this should not be the name of an individual who could potentially leave the organisation.

Provide a way for users of the API to sign up to a mailing list to receive prior notice of any changes.

As one developer commented:

“An API will need to evolve over time in response to the needs of the people attempting to use it, especially if the primary users of the API were not well defined to begin with.”

Error Handling

Once an API has been released it should be kept static and not be changed. If you do have to change an API, maintain backwards compatibility. Contact the API users, warn them well in advance and ask them to get back to you if changes affect the services they are offering. Provide a transitional time frame with support for deprecated APIs. As one developer commented:

“The development of a good set of APIs is very much a chicken-and-egg situation – without a good body of users, it is very hard to guess at the perfect APIs for them, and without a good set of APIs, you cannot gather a set of users. The only way out is to understand that the API development cannot be milestoned and laid-out in a precise manner; the development must be powered by an agile fast iterative method and test/response basis. You will have to bribe a small set of users to start with, generally bribe them with the potential access to a body of information they could not get hold of before. Don’t fall into the trap of considering these early adopters as the core audience; they are just there to bootstrap and if you listen too much to them, the only audience your API will become suitable for is that small bootstrap group.”
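
When an API does have to change, one common way to keep old client code working during a transitional period is to leave the old entry point in place and have it issue a deprecation warning. The sketch below shows the idea in Python; the function names `list_records` and `get_all_records` are invented for the example.

```python
import warnings


def list_records(limit=20):
    """Current version of a hypothetical API function."""
    return [f"record-{n}" for n in range(1, limit + 1)]


def get_all_records(max_records=20):
    """Old name, kept so that existing client code keeps working."""
    warnings.warn(
        "get_all_records() is deprecated; use list_records(limit=...) instead",
        DeprecationWarning,
        stacklevel=2,  # point the warning at the caller's line
    )
    return list_records(limit=max_records)
```

The same principle applies to Web APIs: keep the old URL or parameter answering for an announced period, and say clearly in the documentation when it will be withdrawn.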

Logging the detail of API usage can help identify the most common types of request, which can help direct optimisation strategies. When using external APIs it is best to design defensively: e.g. to cater for situations when the remote services are unavailable or the API fails.
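As a rough sketch of designing defensively, a client of an external API might wrap each call so that a timeout, an unreachable service or a malformed response is logged and a fallback value is returned. The function name `fetch_json` and its `opener` parameter (included so the network call can be swapped out in tests) are assumptions made for this illustration.

```python
import json
import logging
import urllib.request

logger = logging.getLogger("api_client")


def fetch_json(url, timeout=5.0, default=None, opener=urllib.request.urlopen):
    """Fetch JSON from a remote API, returning `default` if the call fails."""
    try:
        with opener(url, timeout=timeout) as response:
            return json.loads(response.read().decode("utf-8"))
    except (OSError, ValueError) as exc:
        # OSError covers network failures and timeouts (URLError is a subclass);
        # ValueError covers responses that are not valid UTF-8 encoded JSON.
        # Log enough context to diagnose the failure later.
        logger.warning("API call failed: url=%s error=%r", url, exc)
        return default
```

The calling code can then decide what to show when `default` comes back, for example a cached copy or a "temporarily unavailable" message, rather than failing outright.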

Consider having a business model in place so that your API remains sustainable. As one developer commented:

“Understand the responsibility to users which comes with creating and promoting APIs: they should be stable, reliable, sustainable, responsive, capable of scaling, well-suited to the needs of the customer, well-documented, standards-based and backwards compatible.”

Acknowledgments

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

About The Best Practices For APIs Documents

The Best Practices For APIs series of briefing documents have been published for the cultural heritage sector.

The advice provided in the documents is based on resources gathered by UKOLN for the JISC-funded Good APIs project.

Further information on the Good APIs project is available from the project’s blog at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

Filed under: APIs, Needs-checking

Posted on September 2nd, 2010 by Brian Kelly

Provide Documentation

Although a good API should be, by its very nature, intuitive and in theory should not need documentation, it is good practice to provide clear, useful documentation and examples for prospective developers. This documentation should be well written, clear and full. Inaccurate, inappropriate or missing documentation of your API is the easiest way to lose users.

Developers should give consideration to including most, if not all, of the following:

- Information on and links to related functions.

- Worked examples and suggestions for use. The examples should be easy to clone and available in different programming languages (e.g. PHP, Java, Ruby, Python etc.).

- Case studies and real world examples.

- Demos – if you want to entice someone to use your API you need good examples that can be re-used quickly. Provide a ‘Getting started’ guide.

- Tutorials and walkthroughs.

- Documentation for less technical developers.

- A trouble shooting guide.

- A reference client/server system that people can code against for testing and possibly access to libraries and example code.

- Opportunities for user feedback, on both the documentation and the API itself

- Migration tips.

- A clear outline of the terms of service of the API, e.g. “This is an experimental service, we may change or withdraw this at any time” or “We guarantee to keep this API running until at least January 2012”.

- Any ground rules.

- An appendix with design decisions. Knowing why an API developed the way it did can often help a new developer understand the interface more rapidly.

Good documentation is effectively a roadmap of the API that helps to orientate a new developer quickly. It will allow others to pick up and run with your API. Providing it on release of your API will result in less time spent taking support calls.

Other suggestions include using a mechanism that allows automatic extraction of the comments, such as Javadoc, and providing inline documentation that produces Intellisense-type context-sensitive help.

Error Handling

Providing good error handling is essential if you want to give the developers using your API an opportunity to correct their mistakes. Error messages should be clear and concise and pitched at the appropriate level. Messages such as “Input has failed” are highly unhelpful and unfortunately fairly common. Avoid:

- Inconsistency (e.g. different variable order in similar methods).

- Over-general error reporting (a single exception object covering a number of very different possible errors).

- Over-complicated request payload – having to send a complex session object as part of each Web service call.

Log API traffic with as much context as possible to deal with resolution of errors. Provide permanently addressable status and changelog pages for your API; if the service or API goes down for any reason, these two pages must still be visible, preferably with why things are down.
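One way to avoid over-general error reporting is to give each kind of failure its own exception type and a message that tells the developer what to correct. The sketch below is illustrative only: the class names, error codes and the `parse_request` function are hypothetical and not part of any particular API.

```python
import datetime


class ApiError(Exception):
    """Base class for all errors raised by this hypothetical API."""
    code = "api_error"


class MissingParameterError(ApiError):
    code = "missing_parameter"

    def __init__(self, name):
        super().__init__(f"Required parameter '{name}' was not supplied.")
        self.name = name


class InvalidDateError(ApiError):
    code = "invalid_date"

    def __init__(self, value):
        super().__init__(f"'{value}' is not a valid date (expected YYYY-MM-DD).")
        self.value = value


def parse_request(params):
    """Validate request parameters (string values), raising a specific error."""
    if "date" not in params:
        raise MissingParameterError("date")
    try:
        return datetime.datetime.strptime(params["date"], "%Y-%m-%d").date()
    except ValueError:
        raise InvalidDateError(params["date"]) from None


def error_response(exc):
    """Turn an ApiError into a small payload a client can act on."""
    return {"error": exc.code, "message": str(exc)}
```

A client can catch `ApiError` to handle everything in one place, or catch the specific subclasses when it wants to react differently to each problem.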

Provide APIs In Different Languages

A simple Web API is usually REST/HTTP based, with XML delivery of a simple schema e.g. RSS. You may want to offer toolkits for different languages and support a variety of formats (e.g. SOAP, REST, JSON etc.).

Try to provide API output in XML format, so that it can also be read by other devices such as kiosks and LED displays. Making returned data available in a number of formats (e.g. XML, JSON, PHP encoded array) saves developers a lot of wasted time parsing XML to make an array.
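Offering the same data in more than one format can be as simple as keeping the record separate from its serialisation. The following is a minimal sketch using only the Python standard library; the `render` function and the flat, one-level record structure are assumptions made for illustration.

```python
import json
import xml.etree.ElementTree as ET


def to_json(record):
    """Serialise a flat record as JSON."""
    return json.dumps(record, sort_keys=True)


def to_xml(record, root_name="record"):
    """Serialise a flat record as XML, one child element per field."""
    root = ET.Element(root_name)
    for key, value in sorted(record.items()):
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


FORMATTERS = {"json": to_json, "xml": to_xml}


def render(record, fmt="xml"):
    """Return the record in the format the client asked for."""
    formatter = FORMATTERS.get(fmt)
    if formatter is None:
        raise ValueError(f"Unsupported format: {fmt!r}")
    return formatter(record)
```

Adding a further output format then means adding one function and one entry in `FORMATTERS`, without touching the code that produces the data.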

Provide sample code that uses the API in different languages. Try to be general where possible so that one client could be written against multiple systems (even if full functionality is not available without specialization).

For database APIs, provide a variety of output options – different metadata formats and/or levels of detail.

Acknowledgments

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

Filed under: APIs

Posted on September 2nd, 2010 by Brian Kelly

Seek To Follow Standards

It is advisable to follow standards where applicable. If possible it makes sense to piggy-back on to accepted Web-oriented standards and use well-known standards from international authorities (IEEE, W3C, OAI) or from successful, established companies. You could refer to the W3C Web Applications Working Group. Where an existing standard isn’t available or appropriate then be consistent, clear, and well-documented.

Although standards are useful and important, you should be aware that some standards may be difficult to interpret or not openly available. It is important to understand the context within which you are operating and the contexts for which particular standards were designed and are appropriate, and on that basis to make informed decisions about the deployment of those standards.

Use Consistent Naming Structures

Use consistent, self-explanatory method names and parameter structures, use explicit names for functions and follow naming conventions. For example, similar methods should have arguments in the same order. Developers who fail to use naming conventions may find that their code is difficult to understand and that other developers find it difficult to integrate and so go elsewhere. Naming decisions are important, and there can be multilingual and cultural issues with understanding names and functionality, so check your ideas with other developers.

Make The API Easier To Access

External developers are important: they can potentially add value to your service, so you need to make it easy for them to do so and make sure that there is a low barrier to access. The maximum entry requirement should be a login (username and password) which then emails out a link.

If it is for a specific institution and contains what could be confidential information then it will need to contain some form of authentication that can be transmitted in the request.

If you need to use a Web API key, make it straightforward to use. You should avoid the bottleneck of user authorisation and any overly complex or non-standard authentication process. One option is to publish a key that anyone can use to make test API calls so that people can get started straight away. Another is to provide a copy of the service for developers to use that is separate from your production service. You could also provide a developer account: developers will need to test your API, so try to be amenable. If you release an open API then it needs to be open.

If possible seek to support Linked Data. Also publish resources that reflect a well-conceived domain model and use URIs that reflect the domain model.

Let Developers Know the API Exists

Making sure that potential users know about your API is vital:

- Contact your development community using email, RSS, Twitter and any other communication mechanisms you have available.

- Write about your API on developer forums. Make sure that you follow this up by having some of your developers monitoring the forum and answering questions.

- If appropriate, publish your API on Programmable Web.

- Blog about your API.

- Make yourself known. If you use Twitter and chat about APIs with other developers you’ll get a name as a developer and people will be interested when you release APIs.

- Add a “developers” link in the footer of your Web site. If you have released a number of APIs then the developer section of your site could be a comprehensive microsite with useful documentation.

- Link to working third-party applications that use your API, or third-party libraries that access it.

Version Control

Deal with versioning from the start. Ensure that you add a version number to all releases and keep developers informed. Either commit to keeping APIs the same or embed version numbers in them so that applications can continue to use earlier versions of APIs if they change. You could use SourceForge or a version repository to assist.
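One common way of embedding version numbers is to put the version in the request path, so that an older client can keep calling the older behaviour after a newer version is released. The sketch below assumes hypothetical paths of the form `/v1/items` and made-up response shapes; it shows only the dispatch idea, not a full Web framework.

```python
def items_v1():
    """Original response shape: a plain list of identifiers."""
    return {"items": ["a", "b"]}


def items_v2():
    """Later response shape: richer objects plus a total count."""
    return {"items": [{"id": "a"}, {"id": "b"}], "total": 2}


# Each released version keeps its own table of handlers.
HANDLERS = {
    "v1": {"items": items_v1},
    "v2": {"items": items_v2},
}


def dispatch(path):
    """Route a path such as '/v1/items' to the handler for that version."""
    parts = path.strip("/").split("/")
    if len(parts) != 2:
        raise LookupError(f"Unrecognised path: {path!r}")
    version, resource = parts
    try:
        handler = HANDLERS[version][resource]
    except KeyError:
        raise LookupError(f"No handler for {path!r}") from None
    return handler()
```

Because `/v1/items` continues to return the original shape, applications written against version 1 are unaffected when version 2 appears.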

Acknowledgments

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

Filed under: APIs

Posted on September 2nd, 2010 by Brian Kelly

About The Best Practices For APIs Documents

This document is the first in a series of four briefing documents which provide advice on the planning processes for the creation of APIs.

The Importance of Planning

As with other activities, the design of APIs requires effective planning. Rather than just adding an API to an existing service or piece of software and moving straight into coding, developers should consider planning, resourcing and managing the creation, release and use of APIs. They need to check that there isn’t already a similar API available before gathering data or developing something new. They should then spend time defining requirements and making sure they consider the functionality they want the user to access.

Although formal planning may not always be appropriate in some ‘quick and dirty’ projects, some form of prototyping can be very helpful. Some areas that might need consideration are scale, and weighing up efficiency and granularity.

Authors who change their specification or don’t produce an accurate specification in the first place may find themselves in trouble later on in a project.

Gathering Requirements

Talking to your users and asking what they would like is just as important in API creation as user interface creation. At times it may be necessary to second-guess requirements but if you have the time it is always more efficient to engage with potential users. Technical people need to ask the user what they are actually after. You could survey a group of developers or ask members of your team.

“The development of a good set of APIs is very much a chicken-and-egg situation – without a good body of users, it is very hard to guess at the perfect APIs for them, and without a good set of APIs, you cannot gather a set of users. The only way out is to understand that the API development cannot be milestoned and laid-out in a precise manner; the development must be powered by an agile fast iterative method and test/response basis. You will have to bribe a small set of users to start with, generally bribe them with the potential access to a body of information they could not get hold of before. Don’t fall into the trap of considering these early adopters as the core audience; they are just there to bootstrap and if you listen too much to them, the only audience your API will become suitable for is that small bootstrap group.”

Make The APIs Useful

When creating an API look at it both from your own perspective and from a user’s perspective, and offer something that can add value or be used in many different ways. One option is to consider developing a more generic application from the start as it will open up the possibilities for future work. Anticipate common requests and optimise your API accordingly. Open up the functions you’re building.

Get feedback from others on how useful it is. Consider different requirements of immediate users and circumstances against archival and preservation requirements.

Collaborating on any bridges and components is a good way to help developers tap into other team knowledge and feedback.

Keep It Simple

The adage “complex things tend to break and simple things tend to work” has been fairly readily applied to the creation of Web APIs. Although simplicity is not always the appropriate remedy, for most applications it is the preferred approach. APIs should be about the exposed data rather than application design.

Keep the specifications simple, especially when you are starting out. Documenting what you plan to do will also help you avoid scope creep. Avoid having too many fields and too many method calls. Offer simplicity, or options with simple or complex levels.

Developers should consider only adding API features if there is a provable extension use case. One approach might be to always ask “do we actually need to expose this via our API?”.

Make It Modular

It is better to create an API that has one function and does it well rather than an API that does many things. Good programming is inherently modular. This allows for easier reuse and sustains a better infrastructure.

The service should define itself and all methods available. This means as you add new features to the API, client libraries can automatically provide interfaces to those methods without needing new code.
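A service that defines itself can be approximated by keeping a registry of the available methods and exposing a call that describes them. The sketch below is one possible illustration; the decorator `api_method`, the `describe` and `call` functions and the two example methods are all hypothetical.

```python
import inspect

_METHODS = {}


def api_method(func):
    """Register a function as a publicly available API method."""
    _METHODS[func.__name__] = func
    return func


@api_method
def get_record(record_id):
    """Return a single record by identifier."""
    return {"id": record_id}


@api_method
def list_records(offset=0, limit=20):
    """Return a page of records."""
    return []


def describe():
    """Describe every registered method: its documentation and parameters."""
    return {
        name: {
            "doc": inspect.getdoc(func),
            "params": list(inspect.signature(func).parameters),
        }
        for name, func in sorted(_METHODS.items())
    }


def call(name, **kwargs):
    """Invoke a registered method by name, as a generic client would."""
    return _METHODS[name](**kwargs)
```

A client library that reads `describe()` at start-up can offer any newly registered method without a new release, since it only needs the generic `call` entry point.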

As one developer commented:

“It is not enough to put a thin layer on top of a database and provide a way to get data from each table separately. Many common pieces of information can only be retrieved in a useful way by relating data between tables. A decent API would seek to make retrieving commonly-related sets of data easy.”

Acknowledgments

This document is based on advice provided by UKOLN’s Good APIs project. Further information is available at <http://blogs.ukoln.ac.uk/good-apis-jisc/>.

Filed under: APIs, Needs-checking

Posted on September 2nd, 2010 by Brian Kelly

What Is Podcasting?

Podcasting has been described as “a method of publishing files to the internet, often allowing users to subscribe to a feed and receive new files automatically by subscription, usually at no cost.” [1].

Podcasting is a relatively new phenomenon, becoming popular in late 2004. Some of the early adopters regard Podcasting as a democratising technology, allowing users to easily create and publish their own radio shows which can be easily accessed without the need for a broadcasting infrastructure. From a technical perspective, Podcasting is an application of the RSS 2.0 format [2]. RSS can be used to syndicate Web content, allowing Web resources to be automatically embedded in third party Web sites or processed by dedicated RSS viewers. The same approach is used by Podcasting, allowing audio files (typically in MP3 format) to be automatically processed by third party applications – however rather than embedding the content in Web pages, the audio files are transferred to a computer hard disk or to an MP3 player – such as an iPod.

The strength of Podcasting is the ease of use it provides rather than any radical new functionality. If, for example, you subscribe to a Podcast provided by the BBC, new episodes will appear automatically on your chosen device – you will not have to go to the BBC Web site to see if new files are available and then download them.

Note that providing MP3 files to be downloaded from Web sites is sometimes described as Podcasting, but the term strictly refers to automated distribution using RSS.

What Can Podcasting Be Used For?

There are several potential applications for Podcasting in an educational context:

- Maximising the impact of talks by allowing seminars, lectures, conference presentations, etc. to be listened to by a wider audience.

- Recording of talks, allowing staff to easily access staff development sessions and meetings as a revision aid, to catch up on missed lectures, etc.

- Automated conversion of text files, email messages, RSS feeds, etc. to MP3 format, allowing the content to be accessed on mobile MP3 players.

- Recordings of meetings to provide access for people who could not attend.

- Enhancing the accessibility of talks to people with disabilities.

Possible Problems

Although there is much interest in the potential for Podcasting, there are potential problem areas which will need to be considered:

- Recording lectures, presentations, etc. may infringe copyright or undermine the business model for the copyright owners.

- Making recordings available to a wider audience could mean that comments could be taken out of context or speakers may feel inhibited when giving presentations.

- The technical quality of recordings may not be to the standard expected.

- Although appealing to the publisher, end users may not make use of the Podcasts.

It would be advisable to seek permission before making recordings or making recordings available as Podcasts.

Podcasting Software

Listening To Podcasts

It is advisable to gain experience of Podcasting initially as a recipient, before seeking to create Podcasts. Details of Podcasting software are given at [3] and [4]. Note that support for Podcasts in iTunes v. 5 [5] has helped enhance the popularity of Podcasts. You should note that you do not need a portable MP3 player to listen to Podcasts – however the ability to listen to Podcasts while on the move is one of its strengths.

Creating Podcasts

When creating a Podcast you first need to create your MP3 (or similar) audio file. Many recording tools are available, such as the open source Audacity software [6]. You may also wish to make use of audio editing software to edit files, include sound effects, etc.

You will then need to create the RSS file which accompanies your audio file, enabling users to subscribe to your recording and automate the download. An increasing number of Podcasting authoring tools and Web services are being developed [7].
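To give a feel for what such an RSS file contains, the sketch below builds a small RSS 2.0 feed in which each episode carries an `enclosure` element pointing at the audio file; this is the element that Podcasting clients use to download new episodes. The function name, the example URLs and the episode details are invented for illustration, and a real feed would normally include further channel and item fields.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_podcast_feed(title, link, description, episodes):
    """Build a minimal RSS 2.0 feed with one <enclosure> per episode."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for episode in episodes:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = episode["title"]
        # RSS 2.0 dates use the RFC 822 style produced by format_datetime.
        ET.SubElement(item, "pubDate").text = format_datetime(episode["published"])
        # The enclosure needs the file's URL, its size in bytes and its MIME type.
        ET.SubElement(
            item,
            "enclosure",
            url=episode["url"],
            length=str(episode["bytes"]),
            type="audio/mpeg",
        )
    return ET.tostring(rss, encoding="unicode")


# Hypothetical example data.
example_feed = build_podcast_feed(
    title="Example Seminar Series",
    link="http://www.example.org/seminars/",
    description="Recordings of seminars.",
    episodes=[
        {
            "title": "Seminar 1",
            "published": datetime(2010, 9, 2, 12, 0, tzinfo=timezone.utc),
            "url": "http://www.example.org/seminars/seminar1.mp3",
            "bytes": 12345678,
        }
    ],
)
```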

References

- Podcasting, Wikipedia,

<http://en.wikipedia.org/wiki/Podcasting>

- RSS 2.0, Wikipedia,

<http://en.wikipedia.org/wiki/Really_Simple_Syndication>

- iPodder Software,

<http://www.ipodder.org/directory/4/ipodderSoftware>

- iTunes – Podcasting,

<http://www.apple.com/podcasting/>

- Podcasting Software (Clients), Podcasting News,

<http://www.podcastingnews.com/topics/Podcast_Software.html>

- Audacity,

<http://audacity.sourceforge.net/>

- Podcasting Software (Publishing), Podcasting News,

<http://www.podcastingnews.com/topics/Podcasting_Software.html>

Filed under: Web 2.0

Posted on September 2nd, 2010 by Brian Kelly

Background

This document provides an introduction to microformats, with a description of what microformats are, the benefits they can provide and examples of their usage. In addition the document discusses some of the limitations of microformats and provides advice on best practices for use of microformats.

What Are Microformats?

“Designed for humans first and machines second, microformats are a set of simple, open data formats built upon existing and widely adopted standards. Instead of throwing away what works today, microformats intend to solve simpler problems first by adapting to current behaviors and usage patterns (e.g. XHTML, blogging).” [1].

Microformats make use of existing HTML/XHTML markup. Typically the <span> and <div> elements and the class attribute are used with agreed class names (such as vevent, dtstart and dtend to define an event and its start and end dates). Applications (including desktop applications, browser tools, harvesters, etc.) can then process this data.
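As an illustration of how an application might process such markup, the sketch below reads a fragment of HTML and extracts events marked up with the vevent, summary, dtstart and dtend class names. It uses only Python's standard `html.parser` module and is deliberately simplified: it assumes well-formed markup with matching end tags and no nested elements inside a property, and the sample event is invented.

```python
from html.parser import HTMLParser

PROPERTIES = {"summary", "dtstart", "dtend", "location"}


class HCalendarParser(HTMLParser):
    """Collect simple hCalendar events from HTML (simplified sketch)."""

    def __init__(self):
        super().__init__()
        self.events = []
        self._event = None   # event currently being read, if any
        self._depth = 0      # nesting depth inside the current vevent
        self._prop = None    # property whose text is being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if self._event is None:
            if "vevent" in classes:
                self._event, self._depth = {}, 1
            return
        self._depth += 1
        for name in PROPERTIES.intersection(classes):
            if attrs.get("title"):
                # Machine-readable value held in the title attribute.
                self._event[name] = attrs["title"]
            else:
                self._prop = name
                self._event[name] = ""

    def handle_data(self, data):
        if self._event is not None and self._prop:
            self._event[self._prop] += data

    def handle_endtag(self, tag):
        if self._event is None:
            return
        if self._prop:
            self._event[self._prop] = self._event[self._prop].strip()
            self._prop = None
        self._depth -= 1
        if self._depth == 0:
            self.events.append(self._event)
            self._event = None


SAMPLE = """
<div class="vevent">
  <span class="summary">Example workshop</span>
  <abbr class="dtstart" title="2010-09-02">2 September</abbr> to
  <abbr class="dtend" title="2010-09-03">3 September</abbr>
</div>
"""

parser = HCalendarParser()
parser.feed(SAMPLE)
events = parser.events
```

Here `events` holds one dictionary with the summary text and the two dates taken from the title attributes, which is the kind of data a browser tool could pass on to a calendar application.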

Examples Of Microformats

Popular examples of microformats include:

- hCard: Markup for contact details such as name, address, email, phone no., etc. Browser tools such as Tails Export [2] allow hCard microformats in HTML pages to be added to desktop applications (e.g. MS Outlook).

- hCalendar: Markup for events such as event name, date and time, location, etc. Browser tools such as Tails Export and Google hCalendar [3] allow hCalendar microformats in HTML pages to be added to desktop calendar applications (e.g. MS Outlook) and remote calendaring services such as Google Calendar.

An example which illustrates commercial takeup of the hCalendar microformat is Yahoo’s Upcoming service [4]. This service allows registered users to provide information about events. This information is stored in hCalendar format, allowing the information to be easily added to a local calendar tool.

Limitations Of Microformats

Microformats have been designed to make use of existing standards such as HTML. They have also been designed to be simple to use and exploit. However such simplicity means that microformats have limitations:

- Possible conflicts with the Semantic Web approach: The Semantic Web seeks to provide a Web of meaning based on a robust underlying architecture and standards such as RDF. Some people feel that the simplicity of microformats lacks the robustness promised by the Semantic Web.

- Governance: The definitions and ownership of microformats schemes (such as hCard and hCalendar) are governed by a small group of microformat enthusiasts.

- Early Adopters: There are not yet well-established patterns of usage, advice on best practices or advice for developers of authoring, viewing and validation tools.

Best Practices for Using Microformats

Despite their limitations microformats can provide benefits to the user community. However in order to maximise the benefits and minimise the risks associated with using microformats it is advisable to make use of appropriate best practices. These include:

- Getting it right from the start: Seek to ensure that microformats are used correctly. Ensure appropriate advice and training is available and that testing is carried out using a range of tools. Discuss the strengths and weaknesses of microformats with your peers.

- Having a deployment strategy: Target use of microformats in appropriate areas. For example, simple scripts could allow microformats to be widely deployed, yet easily managed if the syntax changes.

- Risk management: Have a risk assessment and management plan which identifies possible limitations of microformats and plans in case changes are needed [5].

References

- About Microformats, Microformats.org,

<http://microformats.org/about/>

- Tails Export: Overview, Firefox Addons,

<https://addons.mozilla.org/firefox/2240/>

- Google hCalendar,

<http://greasemonkey.makedatamakesense.com/google_hcalendar/>

- Upcoming, Yahoo!,

<http://upcoming.yahoo.com/>

- Risk Assessment For The IWMW 2006 Web Site, UKOLN,

<http://www.ukoln.ac.uk/web-focus/events/workshops/webmaster-2006/risk-assessment/#microformats>

Filed under: Web 2.0

Posted on September 2nd, 2010 by Brian Kelly

Background

This briefing document provides advice for Web authors, developers and policy makers who are considering making use of Web 2.0 services which are hosted by external third party services. The document describes an approach to risk assessment and risk management which can allow the benefits of such services to be exploited, whilst minimising the risks and dangers of using such services.

Note that other examples of advice are also available [1] [2].

About Web 2.0 Services

This document covers use of third party Web services which can be used to provide additional functionality or services without requiring software to be installed locally. Such services include:

- Search facilities, such as Google University Search and Atomz.

- Social bookmarking services, such as del.icio.us.

- Wiki services, such as WetPaint.

- Usage analysis services, such as Google Analytics and SiteMeter.

- Chat services such as Gabbly and TokBox.

Advantages and Disadvantages

Advantages of using such services include:

- May not require scarce technical effort.

- Facilitates experimentation and testing.

- Enables a diversity of approaches to be taken.

Possible disadvantages of using such services include:

- Potential security and legal concerns e.g. copyright, data protection, etc.

- Potential for data loss or misuse.

- Reliance on third parties with whom there may be no contractual agreements.

Risk Management and Web 2.0

Examples of risks and risk management approaches are given below.

| Risk | Assessment | Management |
| --- | --- | --- |
| Loss of service (e.g. company becomes bankrupt, closed down, …) | Implications if service becomes unavailable. Likelihood of service unavailability. | Use for non-mission critical services. Have alternatives readily available. Use trusted services. |
| Data loss | Likelihood of data loss. Lack of export capabilities. | Evaluation of service. Non-critical use. Testing of export. |
| Performance problems. Unreliability of service. | Slow performance | Testing. Non-critical use. |
| Lack of interoperability. | Likelihood of application lock-in. Loss of integration and reuse of data. | Evaluation of integration and export capabilities. |
| Format changes | New formats may not be stable. | Plan for migration or use on a small-scale. |
| User issues | User views on services. | Gain feedback. |

Note that in addition to risk assessment of Web 2.0 services, there is also a need to assess the risks of failing to provide such services.

Example of a Risk Management Approach

A risk management approach [3] was taken to use of various Web 2.0 services on the Institutional Web Management Workshop 2009 Web site.

- Use of established services:

- Google and Google Analytics are used to provide searching and usage reports.

- Alternatives available:

- Web server log files can still be analysed if the hosted usage analysis services become unavailable.

- Management of services:

- Interfaces to various services were managed to allow them to be easily changed or withdrawn.

- User Engagement:

- Users are warned of possible dangers and invited to engage in a pilot study.

- Learning:

- Learning may be regarded as the aim, not provision of long term service.
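The "alternatives available" point above relies on being able to analyse Web server log files locally. As a rough sketch of what that fallback might involve, the code below counts successful page requests from log lines in the widely used Common Log Format; the regular expression, function name and sample lines are assumptions for illustration, and real log formats vary between servers.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "METHOD path protocol" status size
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def count_page_requests(lines):
    """Count successful GET requests per path, skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match["method"] == "GET" and match["status"] == "200":
            counts[match["path"]] += 1
    return counts


# Invented sample lines for illustration.
SAMPLE_LOG = [
    '192.0.2.1 - - [02/Sep/2010:10:00:00 +0100] "GET /events/ HTTP/1.1" 200 5120',
    '192.0.2.2 - - [02/Sep/2010:10:00:05 +0100] "GET /events/ HTTP/1.1" 200 5120',
    '192.0.2.2 - - [02/Sep/2010:10:00:09 +0100] "GET /missing HTTP/1.1" 404 210',
    'not a log line',
]

page_counts = count_page_requests(SAMPLE_LOG)
```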

References

- Checklist for assessing third-party IT services, University of Oxford,

<http://www.oucs.ox.ac.uk/internal/3rdparty/checklist.xml>

- Guidelines for Using External Services, University of Edinburgh,

<https://www.wiki.ed.ac.uk/download/attachments/8716376/GuidelinesForUsingExternalWeb2.0Services-20080801.pdf?version=1>

- Risk Assessment, IWMW 2009, UKOLN,

<http://iwmw.ukoln.ac.uk/iwmw2009/risk-assessment/>

Filed under: Needs-checking, Risk Management

Posted on September 2nd, 2010 by Brian Kelly

About this document

Performance and reliability are the principal criteria for selecting software. In most procurement exercises however, price is also a determining factor when comparing quotes from multiple vendors. Price comparisons do have a role, but usually not in terms of a simple comparison of purchase prices. Rather, price tends to arise when comparing “total cost of ownership” (TCO), which includes both the purchase price and ongoing costs for support (and licence renewal) over the real life span of the product. This document provides tips about selecting open source software.
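The total cost of ownership comparison described above is simple arithmetic, sketched below. The function name and all of the figures are invented purely for illustration; real comparisons would use quoted prices and your own estimate of the product's life span.

```python
def total_cost_of_ownership(purchase_price, annual_support, annual_licence,
                            years, training=0):
    """Purchase price plus one-off training plus recurring costs over the life span."""
    return purchase_price + training + years * (annual_support + annual_licence)


# Illustrative figures only (not real prices), over a five-year life span.
proprietary_tco = total_cost_of_ownership(
    purchase_price=10000, annual_support=1000, annual_licence=2000, years=5
)
open_source_tco = total_cost_of_ownership(
    purchase_price=0, annual_support=3000, annual_licence=0, years=5, training=4000
)
```

With these made-up numbers the proprietary option comes to 25000 and the open source option to 19000, even though the open source option has the higher annual support cost. The point is that the ranking depends on the whole life span, not on the purchase price alone.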

The Top Tips

- Consider The Reputation

- Does the software have a good reputation for performance and reliability? Here, word of mouth reports from people whose opinion you trust are often key. Some open source software has a very good reputation in the industry, e.g. Apache Web server, GNU Compiler Collection (GCC), Linux, Samba, etc. You should be comparing “best of breed” open source software against its proprietary peers. Discussing your plans with someone with experience using open source software and an awareness of the packages you are proposing to use is vital.

- Monitor Ongoing Effort

- Is there clear evidence of ongoing effort to develop the open source software you are considering? Has there been recent work to fix bugs and meet user needs? Active projects usually have regularly updated web pages and busy development email lists. They usually encourage the participation of those who use the software in its further development. If everything is quiet on the development front, it might be that work has been suspended or even stopped.

- Look At Support For Standards And Interoperability

- Choose software which implements open standards. Interoperability with other software is an important way of getting more from your investment. Good software does not reinvent the wheel, or force you to learn new languages or complex data formats.

- Is There Support From The User Community?

- Does the project have an active support community ready to answer your questions concerning deployment? Look at the project’s mailing list archive, if available. If you post a message to the list and receive a reasonably prompt and helpful reply, this may be a sign that there is an active community of users out there ready to help. Good practice suggests that if you wish to avail yourself of such support, you should also be willing to provide support for other members of the community when you are able.

- Is Commercial Support Available?

- Third party commercial support is available from a diversity of companies, ranging from large corporations such as IBM and Sun Microsystems, to specialist open source organizations such as Red Hat and MySQL, to local firms and independent contractors. Commercial support is most commonly available for more widely used products or from specialist companies who will support any product within their particular specialism.

- Check Versions

- When was the last stable version of the software released? Virtually no software, proprietary or open source, is completely bug free. If there is an active development community, newly discovered bugs will be fixed and patches to the software or a new version will be released. For enterprise use, you need the most recent stable release of the software, but be aware that there may have been many more recent releases in the unstable branch of development. There is, of course, always the option of fixing bugs yourself, since the source code of the software will be available to you. But that rather depends on your (or your team’s) skill set and time commitments.

- Think Carefully About Version 1.0

- Open source projects usually follow the “release early and often” motto. While in development they may have very low version numbers. Typically a product needs to reach its 1.0 release prior to being considered for enterprise use. (This is not to say that many pre-“1.0” versions of software are not very good indeed, e.g. Mozilla’s 0.8 release of its Firefox browser).

- Check The Documentation

- Open source software projects may lag behind in their documentation for end users, but they are typically very good with their development documentation. You should be able to trace a clear history of bug fixes, feature changes, etc. This may provide the best insight into whether the product, at its current point in development, is fit for your purposes.

- Do You Have The Required Skill Set?

- Consider the skill set of yourself and your colleagues. Do you have the appropriate skills to deploy and maintain this software? If not, what training plan will you put in place to match your skills to the task? Remember, this is not simply true for open source software, but also for proprietary software. These training costs should be included when comparing TCOs for different products.

- What Licence Is Available?

- Arguably, open source software is as much about the licence as it is about the development methodology. Read the licence. Well-known licences such as the General Public License (GPL) and the Lesser General Public License (LGPL) have well defined conditions for your contribution of code to the ongoing development of the software or the incorporation of the code into other packages. If you are not familiar with these licences or with the one used by the software you are considering, take the time to clarify conditions of use.

- What Functionality Does The Software Provide?

- Many open source products are generalist and must be specialised before use. Generally speaking, the more general a product is, the more effort is required to specialise it. A more narrowly focused product will reduce the effort required to deploy it, but may lack flexibility. An example of the former is the GNU Compiler Collection (GCC), and an example of the latter might be the Evolution email client, which works well “out of the box” but is only suitable for the narrow range of tasks for which it was intended.

Further Information

Acknowledgements

This document was written by Randy Metcalfe of OSS Watch. OSS Watch is the open source software advisory service for UK higher and further education. It provides neutral and authoritative guidance on free and open source software, and about related open standards.

The OSS Watch Web site is available at http://www.oss-watch.ac.uk/.

Filed under: Needs-checking, Software

Posted on September 2nd, 2010 by Brian Kelly

Introduction

A Risks and Opportunities Framework for exploiting the potential of innovation such as the Social Web has been developed by UKOLN [1]. This approach has been summarised in a briefing document [2]. This briefing document provides further information on the processes which can be used to implement the framework.

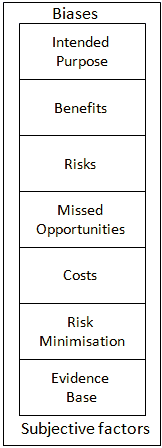

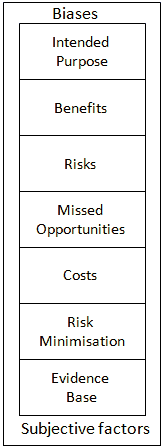

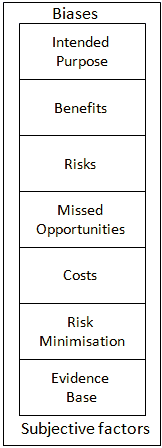

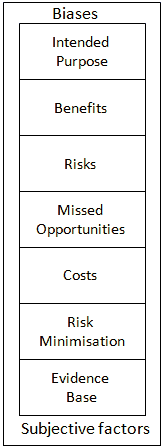

The Risks and Opportunities Framework

The Risks and Opportunities Framework aims to facilitate discussions and decision-making when use of innovative services is being considered.

As illustrated, a number of factors should be addressed in the planning processes for the use of innovative new services, such as use of the Social Web. Further information on these areas is given in [2].

Critical Friends

A ‘Critical Friends’ approach to addressing potential problems and concerns in the development of innovative services is being used by JISC to support its funding calls. As described on the Critical Friends Web site [3]:

The Critical Friend is a powerful idea, perhaps because it contains an inherent tension. Friends bring a high degree of unconditional positive regard. Critics are, at first sight at least, conditional, negative and intolerant of failure.

Perhaps the critical friend comes closest to what might be regarded as ‘true friendship’ – a successful marrying of unconditional support and unconditional critique.

The Critical Friends Web site provides a set of Effective Practice Guidelines [4] for Critical Friends, Programme Sponsors and Project Teams.

A successful Critical Friends approach will ensure that concerns are raised and addressed in an open, neutral and non-confrontational way.

Risk Management and Minimisation

It is important to acknowledge that there may be risks associated with the deployment of new services and to understand what those risks might be. As well as assessing the likelihood of the risks occurring and the significance of such risks there will be a need to identify ways in which such risks can be managed and minimised.

It should be noted that risk management approaches might include education, training and staff development as well as technical development. It should also be recognised that it may be felt that risks are sometimes worth taking.

Gathering Evidence

The decision-making process can be helped if it is informed by evidence. Use of the Risks and Opportunities Framework is based on documentation of intended uses of the new service, perceived risks and benefits, costs and resource implications and approaches for risk minimisation. Where possible the information provided in the documentation should be linked to accompanying evidence.

In a rapidly changing technical environment with changing user needs and expectations there will be a need to periodically revisit evidence in order to ensure that significant changes have not taken place which may influence decisions which have been made.

Using The Framework

A template for use of the framework is summarised below:

| Area | Summary | Evidence |
| --- | --- | --- |
| Intended Use | Specific examples of the intended use of the service. | Examples of similar uses by one’s peers. |
| Benefits | Description of the benefits for the various stakeholders. | Evidence of benefits observed in related uses. |
| Risks | Description of the risks for the various stakeholders. | Evidence of risks entailed in related uses. |
| Missed Opportunities | Description of the risks in not providing the service. | Evidence of risks entailed by peers who failed to innovate. |
| Costs | Description of the costs for the various stakeholders. | Evidence of costs encountered by one’s peers. |
| Risk Minimisation | Description of the approaches to risk minimisation. | Evidence of risk minimisation approaches taken by others. |
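The template above could also be kept as a simple structured record, so that gaps in the documentation are easy to spot. The following is a minimal sketch in Python, not part of the framework itself: the class names `AreaEntry` and `Assessment`, the `gaps` helper and the example service are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# The six areas named in the template above.
AREAS = (
    "Intended Use",
    "Benefits",
    "Risks",
    "Missed Opportunities",
    "Costs",
    "Risk Minimisation",
)


@dataclass
class AreaEntry:
    """One row of the template: a summary plus supporting evidence."""
    summary: str = ""
    evidence: list[str] = field(default_factory=list)


@dataclass
class Assessment:
    """Hypothetical record of one use of the framework for one service."""
    service: str
    rows: dict[str, AreaEntry] = field(
        default_factory=lambda: {area: AreaEntry() for area in AREAS}
    )

    def record(self, area: str, summary: str, *evidence: str) -> None:
        """Fill in one row; reject areas that are not in the template."""
        if area not in self.rows:
            raise ValueError(f"Unknown area: {area}")
        self.rows[area].summary = summary
        self.rows[area].evidence.extend(evidence)

    def gaps(self) -> list[str]:
        """Return the areas that still lack a summary or any evidence."""
        return [
            area for area, row in self.rows.items()
            if not row.summary or not row.evidence
        ]


# Example with placeholder content (not taken from a real assessment).
assessment = Assessment(service="Example photo-sharing service")
assessment.record(
    "Intended Use",
    "Share images from the summer exhibition with remote visitors.",
    "Notes on a similar use by a peer organisation (placeholder)",
)
print(assessment.gaps())
```

Listing the areas that have no summary or no evidence reflects the point made under Gathering Evidence: each statement in the documentation should, where possible, be linked to accompanying evidence.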

References

1. Time To Stop Doing and Start Thinking: A Framework For Exploiting Web 2.0 Services, Kelly, B., Museums and the Web 2009: Proceedings, <http://www.ukoln.ac.uk/web-focus/papers/mw-2009/>

2. A Risks and Opportunities Framework for the Social Web, UKOLN Cultural Heritage briefing document no. 67, <http://www.ukoln.ac.uk/cultural-heritage/documents/briefing-67/>

3. Critical Friends Network, <http://www.critical-friends.org/>

4. Guidelines for Effective Practice, Critical Friends Network, <http://www.critical-friends.org/daedalus/cfpublic.nsf/guidelines?openform>

Filed under: Needs-checking, Risk Management

Posted on September 2nd, 2010 by Brian Kelly

Introduction

In today’s environment of rapid technological innovation and changing user expectations coupled with financial pressures it is no longer possible for cultural heritage organisations to develop networked services without being prepared to take some risks [1]. The challenge is how to assess such risks prior to making a policy decision as to whether the organisation is willing to take such risks.

This briefing document describes a framework which aims to support the decision-making process in the context of possible use of the Social Web.

Assessing Risks

Risks should be assessed within the context of use. This context will include the intended purpose of the service, the benefits which the new service is perceived to bring to the various stakeholders and the costs and other resource implications of the deployment and use of the service.

Assessing Missed Opportunities

In addition to assessing the risks of use of a new service there is also a need to assess the risk of not using the new service – the missed opportunity costs. Failing to exploit a Social Web service could result in a loss of a user community or a failure to engage with new potential users. It may be that the risks of failing to innovate are greater than the risks of using the new service.

Risk Management and Minimisation

It is important to acknowledge that there may be risks associated with the deployment of new services and to understand what those risks might be. As well as assessing the likelihood of the risks occurring and the significance of such risks there will be a need to identify ways in which such risks can be managed and minimised.

It should be noted that risk management approaches might include education, training and staff development as well as technical development. It should also be recognised that it may be felt that risks are sometimes worth taking.

The Risks and Opportunities Framework

The Risks and Opportunities Framework was first described in a paper on “Time To Stop Doing and Start Thinking: A Framework For Exploiting Web 2.0 Services” presented at the Museums and the Web 2009 conference [2] and further described at [3].

This framework aims to facilitate discussions and decision-making when use of a Social Web service is being considered.

The components of the framework are:

- Intended use

- Rather than talking about services in an abstract context (“shall we have a Facebook page?”) specific details of the intended use should be provided.

- Perceived benefits

- A summary of the perceived benefits which use of the Social Web service is expected to provide should be documented.

- Perceived risks

- The perceived risks which use of the Social Web service may entail should be documented.

- Missed opportunities

- A summary of the opportunities and benefits which would be missed through a failure to make use of the Social Web service should be documented.

- Costs

- A summary of the costs and other resource implications of use of the service should be documented.

- Risk minimisation

- Once the risks have been identified and discussed approaches to risk minimisation should be documented.

- Evidence base

- Evidence which backs up the assertions made in use of the framework should be provided.

References

1. Risk Management InfoKit, JISC infoNET, <http://www.jiscinfonet.ac.uk/InfoKits/risk-management>

2. Time To Stop Doing and Start Thinking: A Framework For Exploiting Web 2.0 Services, Kelly, B., Museums and the Web 2009: Proceedings, <http://www.ukoln.ac.uk/web-focus/papers/mw-2009/>

3. Further Developments of a Risks and Opportunities Framework, Kelly, B., UK Web Focus blog, 16 April 2009, <http://ukwebfocus.wordpress.com/2009/04/16/further-developments-of-a-risks-and-opportunities-framework/>

Filed under: Risk Management

Posted on September 2nd, 2010 by Brian Kelly

Introduction

The document An Introduction to the Mobile Web [1] explains how increasing use of mobile devices offers institutions and organisations many opportunities for allowing their resources to be used in exciting new ways. This innovation relates in part to the nature of mobile devices (their portability, location awareness and abundance) but also to the speed and ease with which new applications can be created for them. Some of the current complementary technologies are described below.

QR Codes

Quick Response (QR) codes are two-dimensional barcodes (matrix codes) that allow their contents to be decoded at high speed. They were created by the Japanese corporation Denso-Wave in 1994 and have been used primarily for tracking purposes. They have only recently filtered into mainstream use with the creation of applications that allow them to be read by mobile phone cameras. For further information see An Introduction to QR Codes [2].

Location Based Services (GPS)

More mobile phones are now being sold equipped with Global Positioning System (GPS) chips. GPS, which uses a global navigation satellite system developed in the US, allows the device to provide pinpoint data about location.

Mobile GPS still has a way to go to become fully accurate when pinpointing locations but the potential of this is clear. GPS enabled devices serve as a very effective navigational aid and maps may eventually become obsolete. Use of GPS offers many opportunities for organisations to market their location effectively.
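As an illustration of how location data might be used, the sketch below estimates the distance between a device and a venue from their latitude and longitude using the standard haversine formula, and checks whether the device is within a chosen radius. It is a minimal sketch only: the function names, the default radius and the coordinates are assumptions made for the example, and a real service would also need to allow for the limited accuracy of mobile GPS noted above.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius of the Earth


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_nearby(device, venue, radius_km=1.0):
    """True if the device's (lat, lon) is within radius_km of the venue's."""
    return distance_km(*device, *venue) <= radius_km


# Placeholder coordinates, chosen only for illustration.
venue = (51.3800, -2.3600)
device = (51.3810, -2.3590)
print(is_nearby(device, venue))
```

A check of this kind could, for example, be used to offer visitors information about a venue only when they are close to it.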

SMS Short Codes

SMS (text messaging) is already used by consumers in a multitude of ways, for example to vote, enter a competition or answer a quiz. In the future organisations could set up SMS short codes allowing their users to:

- Express an interest in a product or service or request a brochure

- Request a priority call back

- Receive picture, music, or video content

- Receive search results

- Receive a promotional voucher

- Pay for goods or services

- Engage in learning activities

Bluetooth and Bluecasting

Bluetooth is an open wireless protocol for exchanging data over short distances from fixed and mobile devices. Bluecasting is the provision of any media for Bluetooth use. Organisations could offer content to users who opt in by making their mobile phones discoverable.

Cashless Financial Transactions

Using PayPal it is now possible to send money to anyone with an email address or mobile phone number. Paying using SMS is becoming more common, for example to pay for car parking. In the future people will be able to use the chip in their phone to make contactless payments at the point of sale by waving it across a reader.

The Future